DanJohnson (New member; joined Aug 24, 2021; 5 messages)

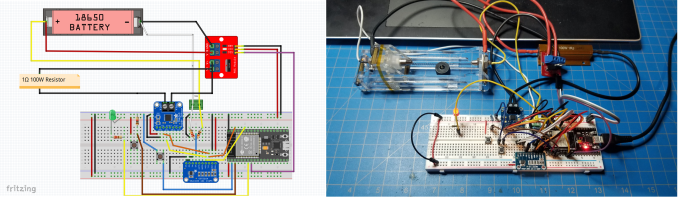

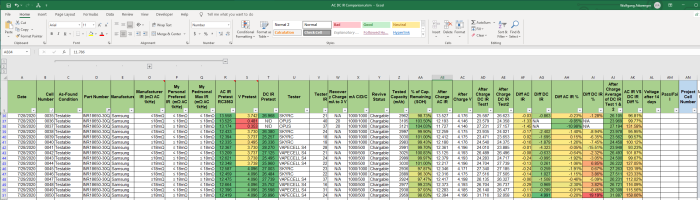

Hello, I'm a first-semester graduate student in the USA and I have recently gotten super interested in lithium batteries! I have a ton of used 18650 cells that I've harvested from all sorts of products. While building an e-bike battery last year, I was beating my head against the wall trying to test close to 200 cells with an OPUS BTC3100, waiting hours to test 4 cells at a time. This gave me the idea to dedicate my research to finding a way to test used 18650 cells quickly and accurately, without running 20 testers off a giant power supply and still spending hours at a time.

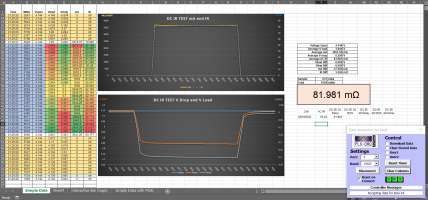

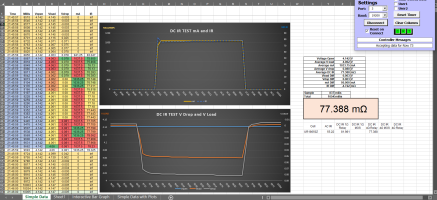

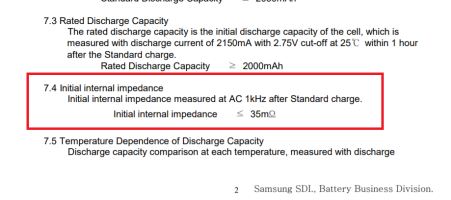

I was originally going to try to develop an internal resistance tester that uses electrochemical impedance spectroscopy (EIS) to instantly determine internal resistance and save you the time of running charge/discharge tests, but then I realized that would require a lot of expensive equipment that I most likely couldn't afford on my $1500 budget. I also just found this site, and given the number of very knowledgeable and experienced professionals and hobbyists on here, I figured y'all might have some ideas.

My goal is to find a quicker way, other than charge/discharge capacity tests, for people to accurately determine whether a used 18650 cell is worth keeping or recycling. I see a huge amount of waste from used 18650 cells that still have a long usable second life, but because they are difficult to test they are thrown out or collected by "recycling" companies and ultimately end up in the landfill.

I would love to hear any input from this community about possible technologies to investigate, ideas for tools that would make testing used cells easier, gaps in the community knowledge that could benefit from formal research or really any suggestions.

I look forward to hearing from you all and I'm excited to have finally found the community I've been looking for!

Best,

Dan
